Python Code

from requests import Request, Session

from requests.exceptions import ConnectionError, Timeout, TooManyRedirects

import json

import os

url = 'https://pro-api.coinmarketcap.com/v1/cryptocurrency/listings/latest'

parameters = {

'start':'1',

'limit':'5000',

'convert':'USD'

}

headers = {

'Accepts': 'application/json',

'X-CMC_PRO_API_KEY': 'Insert your API Key',

}

session = Session()

session.headers.update(headers)

try:

response = session.get(url, params=parameters)

data = json.loads(response.text)

print(data)

except (ConnectionError, Timeout, TooManyRedirects) as e:

print(e)

# NOTE: IOPub Data Rate Error

# Go in and put "jupyter notebook --NotebookApp.iopub_data_rate_limit=1e10"

into the Anaconda Prompt to change this to allow to pull data

pd.set_option('display.max_columns', None)

df1 = pd.json_normalize(data['data'])

df1['time_stamp'] = pd.to_datetime('now')

# Writing a Function to Run the API

def api_runner():

url = 'https://pro-api.coinmarketcap.com/v1/cryptocurrency/listings/latest'

parameters = {

'start':'1',

'limit':'5000',

'convert':'USD'

}

headers = {

'Accepts': 'application/json',

'X-CMC_PRO_API_KEY': 'Insert your API Key',

}

session = Session()

session.headers.update(headers)

try:

response = session.get(url, params=parameters)

data = json.loads(response.text)

#print(data)

except (ConnectionError, Timeout, TooManyRedirects) as e:

return

#print(e)

df1 = pd.json_normalize(data['data'])

df1['time_stamp'] = pd.to_datetime('now')

if not os.path.isfile(r"C:\Users\API.csv"):

df1.to_csv(r"C:\Users\API.csv", header = 'column_names')

else:

df1.to_csv(r"C:\Users\API.csv",mode = 'a', header = False)

# Automating the API Runner Function to Run Every 10 Minutes

import os

from time import sleep

from datetime import datetime

import pytz

def is_market_open():

est = pytz.timezone('US/Eastern')

now = datetime.now(est)

market_open = now.replace(hour=9, minute=30, second=0, microsecond=0)

market_close = now.replace(hour=16, minute=0, second=0, microsecond=0)

return market_open <= now <= market_close

for i in range(400):

if is_market_open():

api_runner()

print('API Runner Completed Successfully')

else:

print('Market Closed. Skipping API Runner.')

sleep(600) # Sleep for 10 minutes

exit()

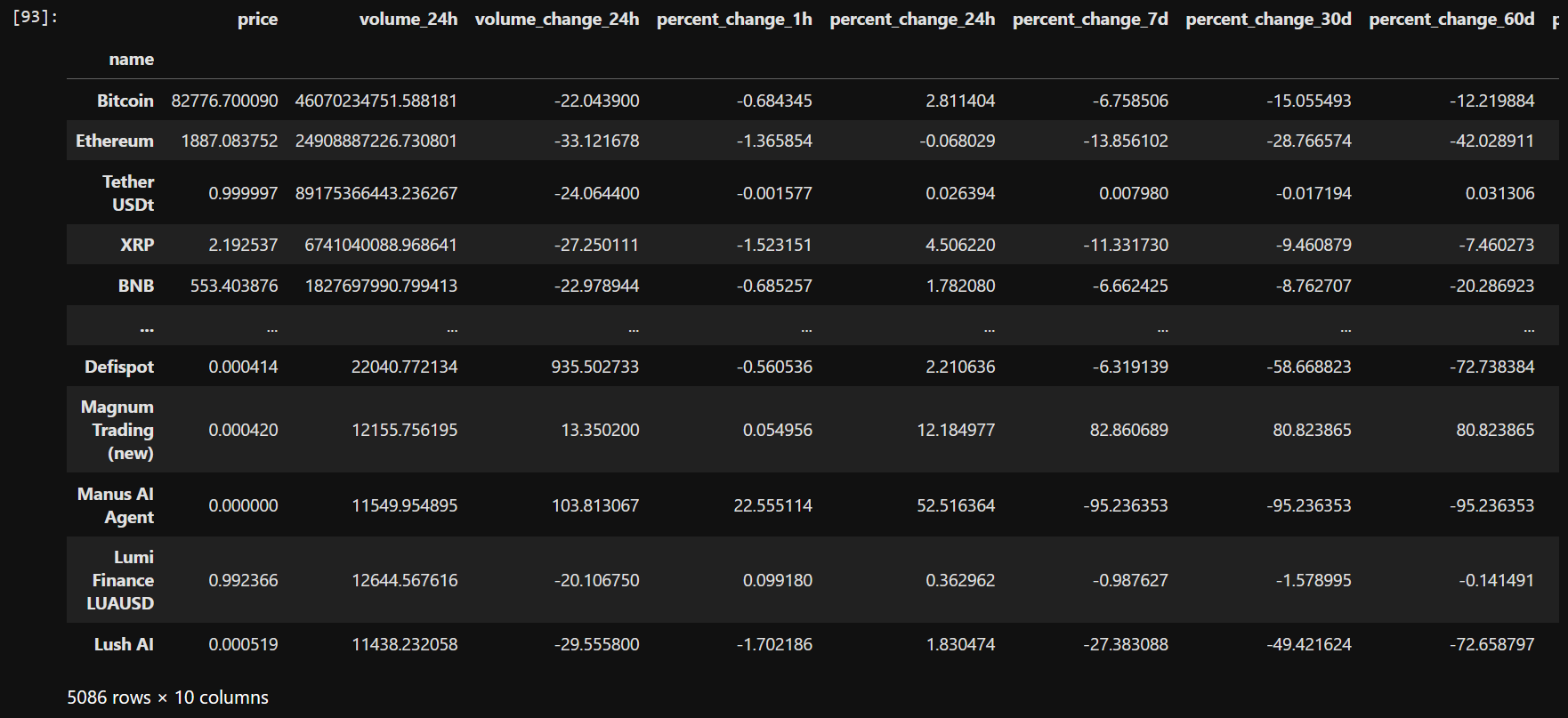

Data Cleaning With Pandas

import pandas as pd

df1 = pd.read_csv (r"C:\Users\API.csv")

df1 = df1.drop_duplicates()

df1 = df1.drop(columns = ["slug", "num_market_pairs", "date_added", "tags","max_supply",

"Unnamed: 0"])

pd.set_option('display.float_format', lambda x: '%5f' % x)

df2 = df1[['name', 'symbol', 'quote.USD.price', 'quote.USD.volume_24h',

'quote.USD.volume_change_24h', 'quote.USD.percent_change_1h',

'quote.USD.percent_change_24h', 'quote.USD.percent_change_7d',

'quote.USD.percent_change_30d', 'quote.USD.percent_change_60d',

'quote.USD.percent_change_90d', 'quote.USD.market_cap']]

df2.columns = ['name', 'symbol', 'price', 'volume_24h', 'volume_change_24h',

'percent_change_1h', 'percent_change_24h', 'percent_change_7d',

'percent_change_30d', 'percent_change_60d', 'percent_change_90d',

'market_cap']

df3 = df2.groupby('name', sort = False)[['price', 'volume_24h',

'volume_change_24h', 'percent_change_1h',

'percent_change_24h', 'percent_change_7d',

'percent_change_30d', 'percent_change_60d',

'percent_change_90d', 'market_cap']].mean()

df3.to_csv(r'C:\Users\Panda Cleaned Crypto Data.csv', index=False)

df3_stacked = df3.stack() #Optional Step to Pivot Data